metadata

license: mit

language:

- en

library_name: transformers

tags:

- text-generation

- information-retrieval

- ranking

- reranking

- blockrank

- mistral

base_model: mistralai/Mistral-7B-Instruct-v0.3

datasets:

- quicktensor/blockrank-msmarco-train-10p

metrics:

- ndcg

- mrr

BlockRank-Mistral-7B: Scalable In-context Ranking with Generative Models

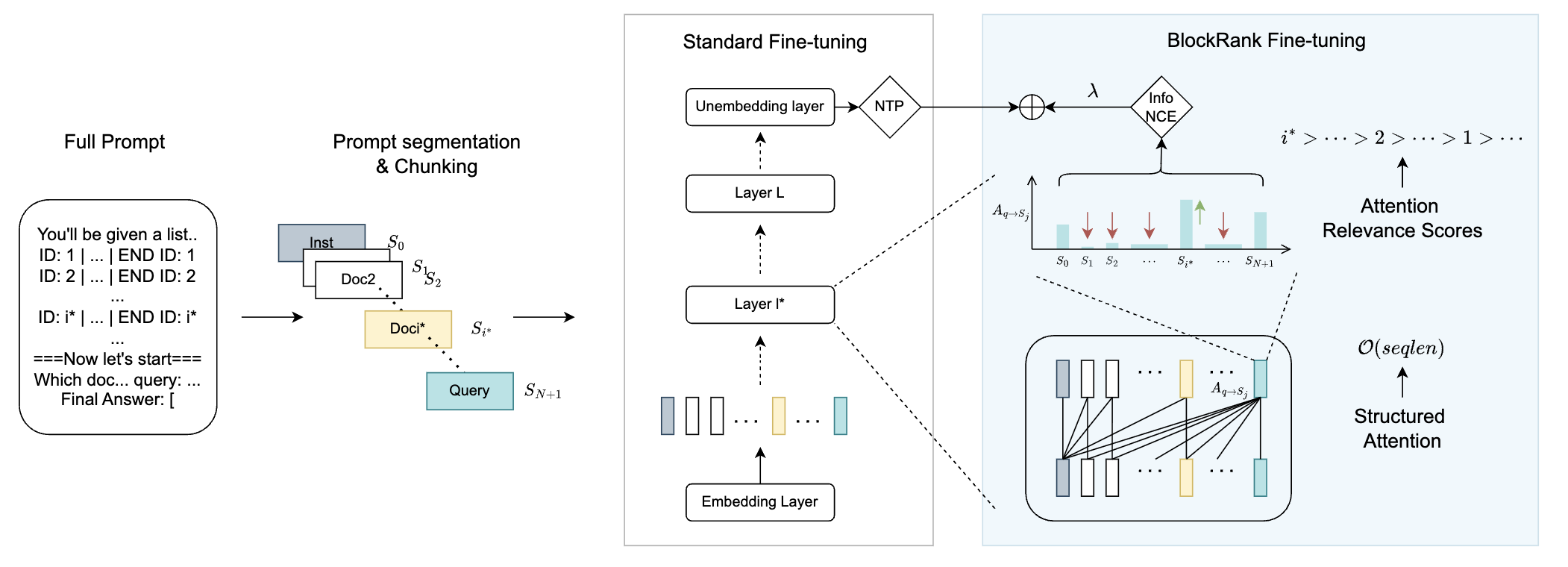

BlockRank-Mistral-7B is a fine-tuned version of Mistral-7B-Instruct-v0.3 optimized for efficient in-context document ranking. It implements BlockRank, a method that makes LLMs efficient and scalable for ranking by aligning their internal attention mechanisms with the structure of the ranking task.

Key Features

- Linear Complexity Attention: Structured sparse attention reduces complexity from O(n²) to O(n)

- 2-4× Faster Inference: Attention-based scoring eliminates autoregressive decoding

- Auxiliary Contrastive Loss: Mid-layer contrastive objective improves relevance signals

- Strong Zero-shot Generalization: SOTA performance on BEIR benchmarks

Citation

If you use this model, please cite:

@article{gupta2025blockrank,

title={Scalable In-context Ranking with Generative Models},

author={Gupta, Nilesh and You, Chong and Bhojanapalli, Srinadh and Kumar, Sanjiv and Dhillon, Inderjit and Yu, Felix},

journal={arXiv preprint arXiv:2510.05396},

year={2025}

}

Model Card Contact

For questions or issues, please open an issue on GitHub.

Additional Resources

- Paper: arXiv:2510.05396

- Code: GitHub Repository

- Dataset: HuggingFace Dataset

- Demo: Colab Notebook

License

This model is released under the MIT License. See LICENSE for details.